1. Request forwarding

For example, suppose I want to forward all requests under 127.0.0.1/topics to xxx:xxx/.

Edit the Nginx configuration file: `sudo vim /etc/nginx/nginx.conf`

```nginx
server { ... }
```

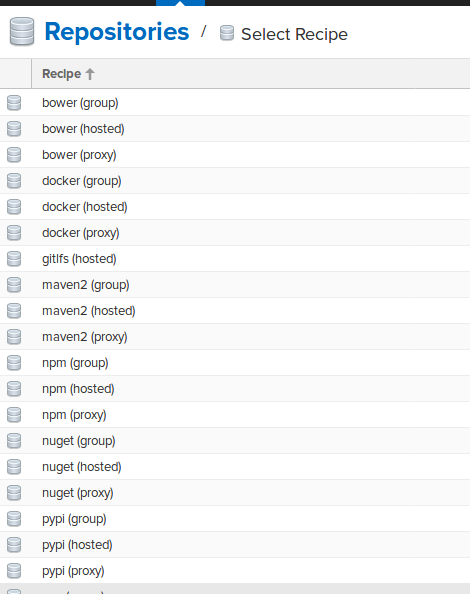
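
How the forwarded path is built depends on whether `proxy_pass` carries a URI part. According to the Nginx documentation, if it does (even just a trailing `/`), the part of the request URI that matched the `location` prefix is replaced by that URI; if it does not, the request URI is passed through unchanged. The Python sketch below models only that rule for prefix locations. The helper `upstream_uri` and the upstream `http://backend:8080` are hypothetical stand-ins (the real target above is `xxx:xxx`), and query strings, regex locations, variables and rewrites are ignored.

```python
from urllib.parse import urlsplit


def upstream_uri(location: str, proxy_pass: str, request_uri: str) -> str:
    """Approximate the URI Nginx sends upstream for a prefix `location` block.

    Simplified model: ignores query strings, regex locations, variables and rewrites.
    """
    if not request_uri.startswith(location):
        raise ValueError("request does not match this location")
    uri_part = urlsplit(proxy_pass).path
    if not uri_part:
        # proxy_pass without a URI part: the request URI is passed unchanged.
        return request_uri
    # proxy_pass with a URI part: the matched location prefix is replaced by it.
    return uri_part + request_uri[len(location):]


print(upstream_uri("/topics/", "http://backend:8080/", "/topics/42"))  # /42
print(upstream_uri("/topics/", "http://backend:8080", "/topics/42"))   # /topics/42
print(upstream_uri("/topics", "http://backend:8080/", "/topics/42"))   # //42
```

The last case is a common pitfall: `location /topics` combined with `proxy_pass http://backend:8080/;` produces a double slash, which is why the location and the `proxy_pass` URI are usually written with matching trailing slashes.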

2. Proxying frontend and backend services

3. Using local caching in Nginx

4. Proxies

1. Forward proxies and reverse proxies

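Forward proxy: the proxy server sits between the client and the target server and sends requests to the target server on the client's behalf. The client usually has to be configured to use the proxy server.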

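Uses: access control, content filtering, anonymous browsing, caching. The tools people use to get around internet censorship are forward proxies.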

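Reverse proxy: the proxy server sits between the client and the target server and handles client requests on the server's behalf. The client does not know the reverse proxy exists; to the client, the proxy is the server.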

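Uses: load balancing, security protection, caching, SSL/TLS termination (offloading encryption work from the backend servers). Nginx, HAProxy and Apache HTTP Server, for example, are commonly used as reverse proxies.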
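
One concrete, protocol-level difference between the two: a client that knows it is talking to a forward proxy puts the full URL in the request line (the *absolute-form* in the HTTP/1.1 syntax, RFC 9112), because the proxy must learn which server to contact; a client talking to a reverse proxy believes it is the origin server and sends only the path (*origin-form*). The function `request_line` below is a hypothetical illustration of this for plain-HTTP `GET` requests only; HTTPS through a forward proxy uses a `CONNECT` tunnel instead.

```python
from urllib.parse import urlsplit


def request_line(url: str, via_forward_proxy: bool) -> str:
    """Return the HTTP/1.1 request line a client sends when fetching `url` with GET."""
    if via_forward_proxy:
        # absolute-form: the forward proxy needs the full URL to know where to go.
        target = url
    else:
        # origin-form: the origin server (or a reverse proxy posing as it) gets only path + query.
        parts = urlsplit(url)
        target = parts.path or "/"
        if parts.query:
            target += "?" + parts.query
    return f"GET {target} HTTP/1.1"


print(request_line("http://example.com/topics?page=2", via_forward_proxy=True))
print(request_line("http://example.com/topics?page=2", via_forward_proxy=False))
```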

2. Transparent and non-transparent proxies

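Transparent and non-transparent proxies are both forward proxies: they sit between the client and the target server and send requests to the target server on the client's behalf.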

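Transparent proxy: for example, a network administrator deploys a transparent proxy at the network boundary to filter objectionable content. When users browse websites, the proxy automatically intercepts and inspects the traffic, and the users do not know the proxy exists.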

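Non-transparent proxy: for example, a user configures the browser to use a non-transparent proxy in order to browse the internet anonymously, manually entering the proxy server's address and port in the browser settings.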
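
On the client side the difference shows up as configuration: a transparent proxy needs nothing, because it intercepts traffic at the network level, while a non-transparent proxy has to be set explicitly, just like entering the address and port in the browser. A minimal Python sketch using the standard library's `urllib.request.ProxyHandler`; the proxy address `198.51.100.1:3128` and the helper `build_proxied_opener` are hypothetical placeholders.

```python
import urllib.request

# Hypothetical non-transparent proxy; replace with a real address and port.
PROXY_URL = "http://198.51.100.1:3128"


def build_proxied_opener(proxy_url: str) -> urllib.request.OpenerDirector:
    """Build an opener that sends HTTP and HTTPS requests through an explicit proxy."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


opener = build_proxied_opener(PROXY_URL)
# opener.open("http://example.com/") would now go through the proxy (needs network access).
```

Without an explicit `ProxyHandler`, `urllib` falls back to proxies taken from the environment (for example the `http_proxy` variable), which is the command-line counterpart of the browser setting.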

5. X-Forwarded-For, X-Real-IP and Remote Address

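**X-Forwarded-For** is commonly used to identify the originating client IP address of a request that passes through HTTP proxies or load balancers. It is an HTTP extension header that HTTP/1.1 (RFC 2616) does not define; it was first introduced by the Squid caching proxy to carry the real IP address of the requesting client. Today it is a de facto standard used widely by HTTP proxies, load balancers and other forwarding services, and RFC 7239 (Forwarded HTTP Extension) standardizes an equivalent replacement, the `Forwarded` header. Each proxy appends the address it received the request from, so the leftmost entry is nominally the original client. Example value: `X-Forwarded-For: client1, proxy1, proxy2`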

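**X-Real-IP**: a custom header in which some reverse proxies (such as Nginx, when configured with `proxy_set_header X-Real-IP $remote_addr;`) place the client's IP address. More precisely, an HTTP proxy usually uses X-Real-IP to carry the IP address of the device that has the TCP connection with it; that device may be another proxy or the actual client. Note that X-Real-IP is not part of any standard. Example value: `X-Real-IP: 203.0.113.195`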
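
To see how the two headers diverge, here is a small Python simulation of a request that travels client → proxy1 → proxy2 → server. It assumes every proxy is an Nginx instance configured with `proxy_set_header X-Real-IP $remote_addr;` and `proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;`. The helper `proxy_hop` and all addresses (taken from documentation ranges) are hypothetical.

```python
from typing import Dict


def proxy_hop(headers: Dict[str, str], peer_addr: str) -> Dict[str, str]:
    """Headers a proxy sends upstream, given the IP of the TCP peer it received the request from.

    Models an Nginx proxy configured with:
        proxy_set_header X-Real-IP       $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    """
    out = dict(headers)
    # X-Real-IP is overwritten with this hop's peer address.
    out["X-Real-IP"] = peer_addr
    # $proxy_add_x_forwarded_for: the incoming list with the peer address appended.
    previous = headers.get("X-Forwarded-For")
    out["X-Forwarded-For"] = f"{previous}, {peer_addr}" if previous else peer_addr
    return out


CLIENT, PROXY1, PROXY2 = "203.0.113.195", "198.51.100.1", "198.51.100.2"

# client -> proxy1 -> proxy2 -> server
sent_by_proxy1 = proxy_hop({}, peer_addr=CLIENT)              # proxy1's peer is the client
sent_by_proxy2 = proxy_hop(sent_by_proxy1, peer_addr=PROXY1)  # proxy2's peer is proxy1
remote_address = PROXY2                                       # the server's peer is proxy2

print("Remote Address:", remote_address)
print("X-Real-IP:", sent_by_proxy2["X-Real-IP"])
print("X-Forwarded-For:", sent_by_proxy2["X-Forwarded-For"])
```

At the server, `X-Real-IP` is `198.51.100.1` (proxy1, the peer of the last proxy), while the client address survives only as the leftmost `X-Forwarded-For` entry: `203.0.113.195, 198.51.100.1`.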

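**Remote Address** is the client IP address as seen by the server, i.e. the source IP address of the TCP connection that carried the request. It is commonly used to identify and trace where requests come from. Because it only reflects the immediate peer, its value can change in proxy and load-balancing setups: behind a proxy, the server typically sees the proxy's address instead of the original client's.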
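
The remote address comes from the TCP connection itself, not from any header, so a client can write anything into `X-Forwarded-For` but cannot change its remote address that way. A minimal Python sketch using the standard library's `http.server` on the loopback interface; the handler name `RecordingHandler` and the header value are illustrative.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

seen = {}


class RecordingHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # client_address is the (ip, port) of the TCP peer: the "Remote Address".
        seen["remote_addr"] = self.client_address[0]
        # X-Forwarded-For is an ordinary header, so the sender controls its value.
        seen["x_forwarded_for"] = self.headers.get("X-Forwarded-For")
        self.send_response(204)
        self.end_headers()

    def log_message(self, format, *args):
        pass  # silence the default per-request log line


server = HTTPServer(("127.0.0.1", 0), RecordingHandler)  # port 0: pick any free port
worker = threading.Thread(target=server.handle_request)  # serve exactly one request
worker.start()

conn = http.client.HTTPConnection("127.0.0.1", server.server_port)
conn.request("GET", "/", headers={"X-Forwarded-For": "203.0.113.195"})
conn.getresponse().read()
conn.close()
worker.join()
server.server_close()

print(seen)
```

The server records `127.0.0.1` as the remote address even though the request's `X-Forwarded-For` header says `203.0.113.195`.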

Reference: 聊聊HTTP的X-Forwarded-For 和 X-Real-IP ("A chat about HTTP's X-Forwarded-For and X-Real-IP")

1. Direct access

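If the client accesses the server directly, without any proxy or load balancer in between, the remote address is the client's IP address. For example, if the client's IP address is 203.0.113.195, the server sees: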

```
Remote Address: 203.0.113.195
```

2. Through a forward proxy

When the request passes through a forward proxy (transparent or non-transparent), the remote address can differ:

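- Transparent proxy: the client is unaware of the proxy, and the remote address can still show the client's IP address. This holds when the proxy preserves the client's source address (a fully transparent proxy); an intercepting proxy that opens its own connection to the server shows up with its own IP instead.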

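- Non-transparent proxy: the client knows about the proxy and is configured to use it. The remote address shows the proxy server's IP address, and the original client's IP address is usually added to the X-Forwarded-For header (if the proxy server is configured to add it).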

A request through a non-transparent proxy:

```
Remote Address: 198.51.100.1 (proxy server IP)
```
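
Whenever the remote address belongs to a proxy, the client's address is only available from `X-Forwarded-For`, which any client can forge. A server therefore usually trusts that header only when the request arrives from a proxy it knows, such as its own reverse proxy or load balancer. The sketch below shows one common approach, similar in spirit to Nginx's realip module (`set_real_ip_from`, `real_ip_header X-Forwarded-For`, `real_ip_recursive on`): walk the list from the right and take the first address that is not a trusted proxy. The function `client_ip` and the trusted set are hypothetical.

```python
from typing import Optional, Set


def client_ip(remote_addr: str, xff: Optional[str], trusted: Set[str]) -> str:
    """Best-guess original client IP, believing X-Forwarded-For only via trusted proxies."""
    if remote_addr not in trusted or not xff:
        # The peer is not one of our proxies (or sent no list): only the TCP address counts.
        return remote_addr
    hops = [h.strip() for h in xff.split(",") if h.strip()]
    # Entries on the right were appended by the proxies closest to the server, so walk
    # right-to-left and stop at the first address that is not a trusted proxy.
    for addr in reversed(hops):
        if addr not in trusted:
            return addr
    # Every listed hop is trusted: fall back to the leftmost entry (or the peer itself).
    return hops[0] if hops else remote_addr


TRUSTED = {"198.51.100.1"}  # hypothetical proxy we control

# Via the trusted proxy: the client address comes from X-Forwarded-For.
print(client_ip("198.51.100.1", "203.0.113.195", TRUSTED))
# Client forges X-Forwarded-For and connects directly: the header is ignored.
print(client_ip("203.0.113.195", "192.0.2.10", TRUSTED))
# Client forges the header and goes through the proxy: the forged entry is skipped.
print(client_ip("198.51.100.1", "192.0.2.10, 203.0.113.195", TRUSTED))
```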