1. Install requests

2. Request a web page

PhantomJS download page: http://phantomjs.org/download.html


```python
>>> import requests
>>> r = requests.get('https://www.baidu.com')
>>> print(r.text)
```
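In real scripts it is usually worth passing a `timeout` (without one, a stalled server can block the call indefinitely) and calling `raise_for_status()` so that 4xx/5xx responses raise instead of being silently printed. The sketch below shows both against a hypothetical local server (`PageHandler`, standing in for a real site so the example runs offline); `fetch_page` is an illustrative helper name, not part of requests.

```python
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

import requests


class PageHandler(BaseHTTPRequestHandler):
    """Hypothetical local server: '/' returns a small HTML page, anything else is 404."""

    def do_GET(self):
        if self.path == "/":
            status, body = 200, "<html><body><p>hello</p></body></html>".encode("utf-8")
        else:
            status, body = 404, b"not found"
        self.send_response(status)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # silence per-request logging


def fetch_page(url, timeout=10):
    """Return the page text; raise requests.HTTPError on a 4xx/5xx status."""
    r = requests.get(url, timeout=timeout)  # timeout in seconds
    r.raise_for_status()
    return r.text


server = HTTPServer(("127.0.0.1", 0), PageHandler)  # port 0: let the OS pick a free port
threading.Thread(target=server.serve_forever, daemon=True).start()
base = f"http://127.0.0.1:{server.server_port}"
missing_status = None
try:
    html = fetch_page(base + "/")
    print(html)
    try:
        fetch_page(base + "/missing")
    except requests.HTTPError as e:
        missing_status = e.response.status_code  # the Response is attached to the error
        print("HTTP error:", missing_status)
finally:
    server.shutdown()
    server.server_close()
```

Against a real site you would only call `fetch_page('https://www.baidu.com')`; the local server exists purely to make the sketch self-contained.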

3. Retry failed requests

If a request fails, you can use urllib3's `Retry` together with requests' `HTTPAdapter` to retry it automatically.


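```python
import requests
from requests.adapters import HTTPAdapter
from urllib3.util import Retry

try:
    # Retry up to 5 times, with exponential backoff between attempts,
    # when the server answers with one of these status codes
    retry = Retry(
        total=5,
        backoff_factor=2,
        status_forcelist=[429, 500, 502, 503, 504],
    )
    adapter = HTTPAdapter(max_retries=retry)
    session = requests.Session()
    # Apply the retry policy to every URL requested through this session
    session.mount('https://', adapter)
    session.mount('http://', adapter)
    # This endpoint always returns 502, so every retry fails and an exception is raised
    r = session.get('https://httpbin.org/status/502', timeout=180)
    print(r.status_code)
except requests.exceptions.RequestException as e:
    # Once the retries are used up, requests raises RetryError (a RequestException)
    print(e)
```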
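Because `httpbin.org/status/502` never succeeds, the example above only shows retries being exhausted. To see a retry actually recover, here is a minimal sketch against a hypothetical local server (`FlakyHandler`) that answers 503 twice and then 200. `FlakyHandler`, `fetch_with_retry`, and the smaller `total`/`backoff_factor` values are illustration choices, not from the original.

```python
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

import requests
from requests.adapters import HTTPAdapter
from urllib3.util import Retry


class FlakyHandler(BaseHTTPRequestHandler):
    """Hypothetical test server: 503 for the first two requests, then 200."""
    hits = 0

    def do_GET(self):
        FlakyHandler.hits += 1
        status = 503 if FlakyHandler.hits <= 2 else 200
        body = b"ok" if status == 200 else b"busy"
        self.send_response(status)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the console quiet


def fetch_with_retry(url):
    # Small backoff so the demo finishes quickly; 503 is the only retried status here
    retry = Retry(total=3, backoff_factor=0.1, status_forcelist=[503])
    adapter = HTTPAdapter(max_retries=retry)
    session = requests.Session()
    session.mount("http://", adapter)
    session.mount("https://", adapter)
    return session.get(url, timeout=10)


server = HTTPServer(("127.0.0.1", 0), FlakyHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()
try:
    r = fetch_with_retry(f"http://127.0.0.1:{server.server_port}/")
    print(r.status_code, r.text, FlakyHandler.hits)  # 200 ok 3
finally:
    server.shutdown()
    server.server_close()
```

The caller only sees the final 200 response; the two 503 answers are absorbed inside the adapter, which is why the server counts three hits.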

Reference: https://oxylabs.io/blog/python-requests-retry
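To estimate how long the `Retry(total=5, backoff_factor=2, ...)` settings above can wait in total, the sketch below simulates failed attempts offline by calling `Retry.increment()` and reading `get_backoff_time()` after each one. It assumes urllib3's default behaviour of no delay before the first retry and `backoff_factor * 2 ** (n - 1)` seconds after the n-th consecutive failure, capped by the default maximum backoff; a server's `Retry-After` header can override these delays in real requests.

```python
from urllib3.exceptions import MaxRetryError
from urllib3.util import Retry

# Same settings as the retry example above
retry = Retry(total=5, backoff_factor=2, status_forcelist=[429, 500, 502, 503, 504])

delays = []
try:
    while True:
        # Simulate one more failed attempt; increment() returns a new Retry object
        # and raises MaxRetryError once the retry budget is used up.
        retry = retry.increment(method="GET", url="/status/502")
        delays.append(retry.get_backoff_time())
except MaxRetryError:
    pass

print(delays)       # sleep (seconds) before each of the 5 retries
print(sum(delays))  # total time spent sleeping before giving up
```

With these settings the delays should come out as 0, 4, 8, 16 and 32 seconds, i.e. about a minute of waiting (plus request time) before `RetryError` is raised.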

4. Parse the page with lxml

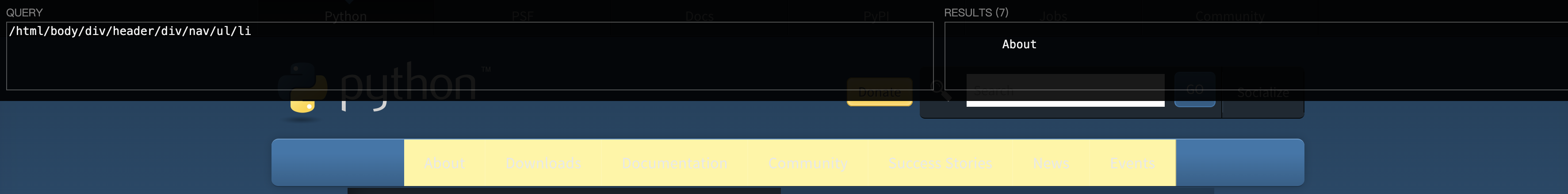

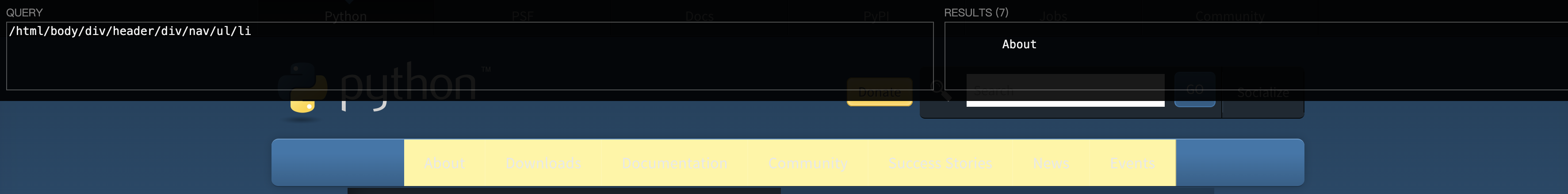

You can install the XPath Helper extension to test XPath expressions in the browser: https://chromewebstore.google.com/detail/xpath-helper/hgimnogjllphhhkhlmebbmlgjoejdpjl. Using the expression with lxml:


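```python
from lxml import etree

# r is the Response returned by an earlier requests.get(...) call
tree = etree.HTML(r.content)
# xpath() returns a list of the matching <li> Element objects
items = tree.xpath('/html/body/div/header/div/nav/ul/li')
print(items)
```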
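The query above returns `Element` objects rather than text. A minimal sketch of pulling out text and attributes instead, run against a hypothetical inline HTML snippet shaped like the path above (the snippet, its links, and the relative `//nav//li/a` queries are illustration assumptions, not the original target page):

```python
from lxml import etree

# Hypothetical HTML standing in for r.content
html = b"""
<html><body>
  <div><header><div><nav><ul>
    <li><a href="/news">News</a></li>
    <li><a href="/map">Map</a></li>
  </ul></nav></div></header></div>
</body></html>
"""

tree = etree.HTML(html)

# Absolute path, same shape as in the text: a list of <li> Elements
items = tree.xpath('/html/body/div/header/div/nav/ul/li')

# Relative queries tend to survive small layout changes better than absolute paths
labels = tree.xpath('//nav//li/a/text()')  # text nodes inside each link
links = tree.xpath('//nav//li/a/@href')    # href attribute values

print(len(items))
print([str(s) for s in labels])
print([str(s) for s in links])
```

Ending an XPath with `text()` or `@attr` makes lxml return strings directly, so no further `.text` or `.get()` calls are needed.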