This post compares how large the same data becomes after serialization with Thrift (TCompactProtocol), protobuf, and Avro (AvroKeyOutputFormat).

Because Thrift's `binary` type cannot be serialized into binary a second time, the test schema contains no fields of type `binary`.
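Much of the size gap between Thrift's two protocols (compare the TBinaryProtocol and TCompactProtocol rows in the results table) comes from how integers and field headers are written. TBinaryProtocol uses fixed widths: 4 bytes for an i32, 8 for an i64, and 3 bytes per field header (1 type byte plus a 2-byte field id). TCompactProtocol writes integers as ZigZag varints and packs most field headers into a single byte. The sketch below is not Thrift's own code; it only reimplements those size rules so the effect is visible. The helper names (`compactI32Size`, `compactFieldHeaderSize`, and so on) are illustrative.

```scala
// Sketch (not Thrift's implementation): byte cost under TBinaryProtocol vs TCompactProtocol.
// Paste into the Scala REPL or run as a script.

// TBinaryProtocol: fixed-width integers; field header = 1-byte type + 2-byte field id.
val BinaryI32Size = 4
val BinaryI64Size = 8
val BinaryFieldHeaderSize = 3

// ZigZag maps signed to unsigned so small magnitudes stay small: 0->0, -1->1, 1->2, -2->3, ...
def zigzag32(n: Int): Int = (n << 1) ^ (n >> 31)
def zigzag64(n: Long): Long = (n << 1) ^ (n >> 63)

// Bytes needed by an unsigned varint: one byte per 7-bit group in use.
def varintSize(v: Long): Int = {
  var x = v
  var bytes = 1
  while ((x & ~0x7FL) != 0L) {
    x = x >>> 7
    bytes += 1
  }
  bytes
}

// TCompactProtocol: i32/i64 are ZigZag varints (at most 5 and 10 bytes respectively).
def compactI32Size(n: Int): Int = varintSize(zigzag32(n).toLong & 0xFFFFFFFFL)
def compactI64Size(n: Long): Int = varintSize(zigzag64(n))

// Compact field header: 1 byte when the id grows by 1..15 over the previous field,
// otherwise 1 type byte followed by the field id as a ZigZag varint.
def compactFieldHeaderSize(prevId: Short, id: Short): Int = {
  val delta = id - prevId
  if (delta > 0 && delta <= 15) 1 else 1 + compactI32Size(id)
}

for (n <- Seq(0, 1, -1, 63, 64, 1000, Int.MaxValue, Int.MinValue))
  println(s"i32 $n: binary=$BinaryI32Size bytes, compact=${compactI32Size(n)} bytes")
```

Small non-negative values and sequential field ids, which dominate typical records, cost 1 or 2 bytes in the compact encoding instead of 3, 4, or 8; only very large magnitudes end up larger than the fixed-width form.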

## 1. Avro schema

The Avro schema of the test data is defined as follows:

```json
{
```

## 2. Thrift schema

The Thrift schema of the test data is defined as follows:

```thrift
namespace java com.linkedin.haivvreo
```

## 3. Protobuf schema

```protobuf
syntax = "proto3";
```

Compile the protobuf schema:

```shell
protoc -I=./ --java_out=src/main/java/ ./src/main/proto3/test_serializer.proto
```

## 4. Test procedure

The test data is generated randomly in code as Thrift objects:

```scala
val obj = new test_serializer()
```

As follows.

For Avro objects, the data can be generated from the Avro-generated Java class:

```scala
val rdd = sc.parallelize(Seq(1, 1, 1, 1, 1))
```

It can also be generated directly from the Avro schema:

```scala
val rdd = spark.sparkContext.parallelize(Seq(1, 1, 1, 1, 1, 1, 1))
```

Save the Spark RDD as LZO-compressed files:

```scala
scala> import com.hadoop.compression.lzo.LzopCodec
```

Save the Spark RDD as Snappy-compressed files:

```scala
scala> import org.apache.hadoop.io.compress.SnappyCodec
```

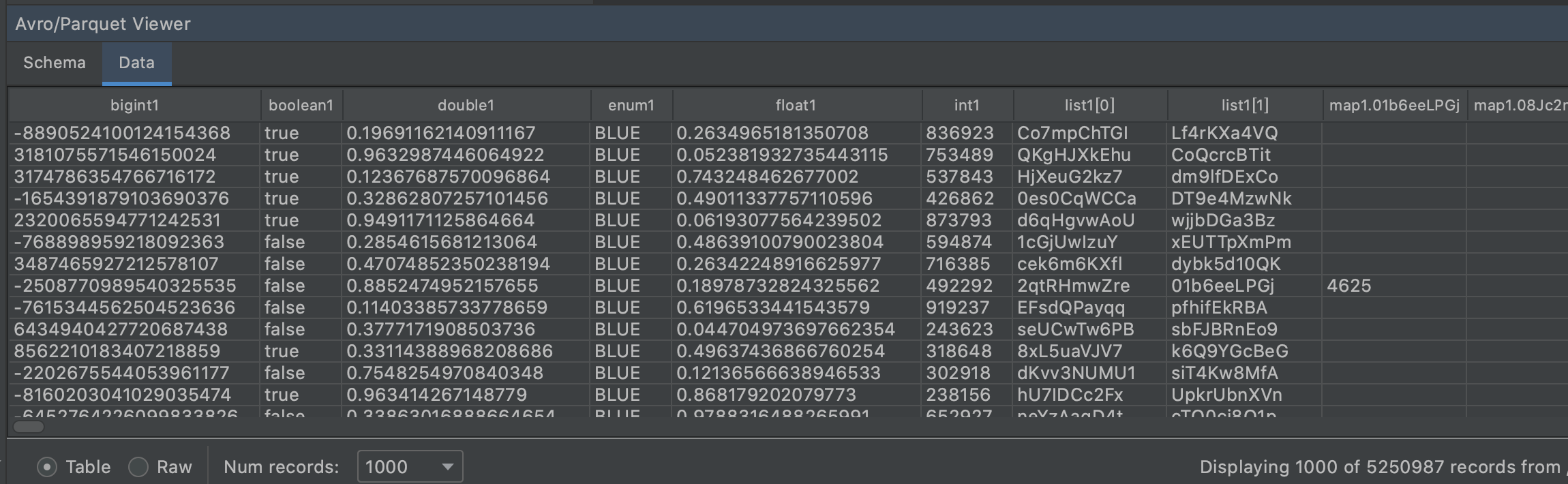

Test results:

| Serialization framework | Format | Compression / protocol | Rows | Files | File size |
|---|---|---|---|---|---|
| avro | AvroKeyOutputFormat | none | 5250987 | 1 | 587.9 MB |
| avro | AvroKeyOutputFormat | SNAPPY | 5250987 | 1 | 453.2 MB |
| avro | AvroParquetOutputFormat | SNAPPY | 5250987 | 1 | 553.7 MB |
| thrift | ParquetThriftOutputFormat | SNAPPY | 5250987 | 1 | 570.5 MB |
| thrift | SequenceFileOutputFormat | TBinaryProtocol | 5250987 | 1 | 1.19 GB |
| thrift | SequenceFileOutputFormat | TCompactProtocol | 5250987 | 1 | 788.7 MB |
| thrift | SequenceFileOutputFormat | TCompactProtocol + DefaultCodec | 5250987 | 1 | 487.1 MB |
| json | textfile | none | 5250987 | 1 | 1.84 GB |
| json | textfile | gzip | 5250987 | 1 | 570.8 MB |
| json | textfile | lzo | 5250987 | 1 | 716 MB |
| json | textfile | snappy | 5250987 | 1 | 727 MB |
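To compare the rows more directly, the sizes can be normalized to bytes per record and to a ratio against uncompressed JSON text. The short Scala sketch below copies the numbers from the table; it assumes binary units (1 GB = 1024 MB, 1 MB = 1024 * 1024 bytes), since the post does not state which convention the reported sizes use. The labels and helper names are illustrative.

```scala
// Sizes copied from the results table, in MB. Assumption: 1 GB = 1024 MB.
val rows = 5250987L
val sizesMb: Seq[(String, Double)] = Seq(
  "avro / AvroKeyOutputFormat / none"                       -> 587.9,
  "avro / AvroKeyOutputFormat / snappy"                     -> 453.2,
  "avro / AvroParquetOutputFormat / snappy"                 -> 553.7,
  "thrift / ParquetThriftOutputFormat / snappy"             -> 570.5,
  "thrift / SequenceFile / TBinaryProtocol"                 -> 1.19 * 1024,
  "thrift / SequenceFile / TCompactProtocol"                -> 788.7,
  "thrift / SequenceFile / TCompactProtocol + DefaultCodec" -> 487.1,
  "json / textfile / none"                                  -> 1.84 * 1024,
  "json / textfile / gzip"                                  -> 570.8,
  "json / textfile / lzo"                                   -> 716.0,
  "json / textfile / snappy"                                -> 727.0
)
val jsonBaselineMb = 1.84 * 1024

// Average bytes per record. Assumption: 1 MB = 1024 * 1024 bytes.
def bytesPerRow(mb: Double): Double = mb * 1024 * 1024 / rows

// Size relative to uncompressed JSON text (lower is smaller).
def ratioToJson(mb: Double): Double = mb / jsonBaselineMb

// Print every configuration from smallest to largest.
for ((name, mb) <- sizesMb.sortBy(_._2))
  println(f"$name%-56s $mb%8.1f MB ${bytesPerRow(mb)}%7.1f B/row ${ratioToJson(mb)}%5.2f x json")
```

Sorting by size makes it easy to see that Avro with Snappy is the smallest file in this test and that uncompressed JSON and TBinaryProtocol are the largest.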