Hive uses ANTLR for parsing.

A parser decodes an unstructured string into a structured data structure ("a parser is a function accepting strings as input and returning some structure as output"); see the Wikipedia article Parser_combinator.
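As a minimal illustration of that definition, here is a Java (16+, for records) sketch in which the parser is literally a Function<String, List<Column>>: it turns an unstructured string such as "id int, name string" into structured Column values. The Column record and the input format are hypothetical and chosen only for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Function;

public class ColumnListParser {
    // The structured value the parser produces (hypothetical, for illustration).
    record Column(String name, String type) {}

    // A parser in the sense above: a function from an unstructured String
    // to a structured result, here a list of columns.
    static final Function<String, List<Column>> PARSER = input -> {
        List<Column> columns = new ArrayList<>();
        for (String part : input.split(",")) {
            String trimmed = part.trim();
            if (trimmed.isEmpty()) continue; // tolerate a trailing comma
            String[] nameAndType = trimmed.split("\\s+");
            if (nameAndType.length != 2) {
                throw new IllegalArgumentException("expected '<name> <type>' but got: " + trimmed);
            }
            columns.add(new Column(nameAndType[0], nameAndType[1]));
        }
        return columns;
    };

    public static void main(String[] args) {
        System.out.println(PARSER.apply("id int, name string"));
        // prints [Column[name=id, type=int], Column[name=name, type=string]]
    }
}
```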

Parsers come in two kinds: parser combinators and parser generators. For the difference between them, see 王垠 (Yin Wang)'s article 对 Parser 的误解 ("Misconceptions about Parsers").

1. With a parser combinator, you write the parser by hand: a parser combinator is a higher-order function that accepts several parsers as input and returns a new parser as its output. An example is Thrift's parser:

https://github.com/apache/thrift/blob/master/compiler/cpp/src/thrift/main.cc
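To make the combinator idea concrete, here is a minimal hand-written sketch in Java (16+). It is written for illustration only and is not Thrift's parser or any library's API; all names (Parser, Result, token, seq, sepBy1, SELECT) are hypothetical. token builds a primitive parser from a regular expression, while seq and sepBy1 are higher-order functions that take parsers and return a new parser.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Optional;
import java.util.function.BiFunction;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class Combinators {
    // Result of a successful parse: the parsed value and the position just after it.
    record Result<T>(T value, int next) {}

    // A parser takes the input and a start position and either fails (empty) or succeeds.
    @FunctionalInterface
    interface Parser<T> {
        Optional<Result<T>> parse(String input, int pos);
    }

    // The parsed statement (a hypothetical AST for this sketch).
    record Select(List<String> columns, String table) {}

    // Primitive parser: matches a regex at pos, skipping leading whitespace.
    static Parser<String> token(String regex) {
        Pattern pattern = Pattern.compile("\\s*(" + regex + ")");
        return (input, pos) -> {
            Matcher m = pattern.matcher(input);
            m.region(pos, input.length());
            if (!m.lookingAt()) return Optional.empty();
            return Optional.of(new Result<>(m.group(1), m.end()));
        };
    }

    // Combinator: run a, then b on the remaining input, and combine both values.
    static <A, B, C> Parser<C> seq(Parser<A> a, Parser<B> b, BiFunction<A, B, C> combine) {
        return (input, pos) -> a.parse(input, pos).flatMap(ra ->
                b.parse(input, ra.next()).map(rb ->
                        new Result<C>(combine.apply(ra.value(), rb.value()), rb.next())));
    }

    // Combinator: one or more p separated by sep (separator values are discarded).
    static <T> Parser<List<T>> sepBy1(Parser<T> p, Parser<String> sep) {
        return (input, pos) -> {
            Optional<Result<T>> first = p.parse(input, pos);
            if (first.isEmpty()) return Optional.empty();
            List<T> items = new ArrayList<>();
            items.add(first.get().value());
            int cur = first.get().next();
            while (true) {
                Optional<Result<String>> s = sep.parse(input, cur);
                if (s.isEmpty()) break;
                Optional<Result<T>> item = p.parse(input, s.get().next());
                if (item.isEmpty()) break; // leave a dangling separator unconsumed
                items.add(item.get().value());
                cur = item.get().next();
            }
            return Optional.of(new Result<List<T>>(items, cur));
        };
    }

    // Run p and require that only whitespace remains afterwards.
    static <T> Optional<T> parseAll(Parser<T> p, String input) {
        return p.parse(input, 0)
                .filter(r -> input.substring(r.next()).isBlank())
                .map(Result::value);
    }

    static final Parser<String> IDENT = token("[A-Za-z_][A-Za-z0-9_]*");
    // SELECT col, col, ...  ->  list of column names
    static final Parser<List<String>> COLUMNS =
            seq(token("(?i:SELECT)\\b"), sepBy1(IDENT, token(",")), (kw, cols) -> cols);
    // FROM table  ->  table name
    static final Parser<String> TABLE = seq(token("(?i:FROM)\\b"), IDENT, (kw, name) -> name);
    // The whole statement is itself just another combined parser.
    static final Parser<Select> SELECT = seq(COLUMNS, TABLE, Select::new);

    public static void main(String[] args) {
        System.out.println(parseAll(SELECT, "SELECT a, b FROM t"));
        // prints Optional[Select[columns=[a, b], table=t]]
        System.out.println(parseAll(SELECT, "SELECT FROM t"));
        // prints Optional.empty
    }
}
```

Each combinator reports failure as Optional.empty() and success as the position after the match; real combinator libraries add error messages and control over backtracking on top of this.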

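2. With a parser generator, you write the grammar in a dedicated description language and the tool turns it into parser code automatically. Examples include ANTLR's .g4 grammar files, Calcite's .ftl grammar files (FreeMarker templates that Calcite expands into a JavaCC grammar), the jison used by Hue, and flex and CUP. The drawback is that, because the parser code is generated, debugging it is harder.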

Parsers built with ANTLR include those of Hive, Presto, and Spark SQL.

See the article by Meituan-Dianping (美团点评):

https://tech.meituan.com/2014/02/12/hive-sql-to-mapreduce.html

and the test cases in the Hive source code:

https://github.com/apache/hive/blob/branch-1.1/ql/src/test/org/apache/hadoop/hive/ql/parse/TestHiveDecimalParse.java

Hive's grammar files:

Older Hive (an ANTLR 3 .g grammar):

https://github.com/apache/hive/blob/59d8665cba4fe126df026f334d35e5b9885fc42c/parser/src/java/org/apache/hadoop/hive/ql/parse/HiveParser.g

Newer Hive (the ANTLR 4 .g4 grammar of the HPL/SQL module):

https://github.com/apache/hive/blob/master/hplsql/src/main/antlr4/org/apache/hive/hplsql/Hplsql.g4

Spark's .g4 file:

https://github.com/apache/spark/blob/master/sql/catalyst/src/main/antlr4/org/apache/spark/sql/catalyst/parser/SqlBase.g4

Presto's .g4 file:

https://github.com/prestodb/presto/blob/master/presto-parser/src/main/antlr4/com/facebook/presto/sql/parser/SqlBase.g4

Confluent KSQL's .g4 file:

https://github.com/confluentinc/ksql/blob/master/ksqldb-parser/src/main/antlr4/io/confluent/ksql/parser/SqlBase.g4

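Parsers built on Apache Calcite include those of Apache Flink and Apache Storm, among others. MyBatis is listed below too, but only because its Mapper generator uses the same .ftl (FreeMarker template) format; it does not use Calcite's parser.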

Flink's .ftl file:

https://github.com/apache/flink/blob/master/flink-table/flink-sql-parser/src/main/codegen/includes/parserImpls.ftl

The MyBatis Mapper generator's template:

https://github.com/abel533/Mapper/blob/master/generator/src/main/resources/generator/mapper.ftl

Storm's .ftl file:

https://github.com/apache/storm/blob/master/sql/storm-sql-core/src/codegen/includes/parserImpls.ftl

There is also Impala, whose parser is built with JFlex (a Java lexer generator modeled on flex) and CUP. For how to use Impala's parser to parse a query, see another post: 使用Impala parser解析SQL ("Parsing SQL with the Impala parser").

Parser test cases:

https://github.com/cloudera/Impala/blob/master/fe/src/test/java/com/cloudera/impala/analysis/ParserTest.java

Source code:

https://github.com/apache/impala/blob/master/fe/src/main/jflex/sql-scanner.flex

and

https://github.com/apache/impala/blob/master/fe/src/main/cup/sql-parser.cup

Impala also uses a small amount of ANTLR:

https://github.com/apache/impala/blob/master/fe/src/main/java/org/apache/impala/analysis/ToSqlUtils.java

There is also jison, used by Hue; jison is a parser generator written in JavaScript that produces JavaScript parsers:

https://github.com/cloudera/hue/tree/master/desktop/core/src/desktop/js/parse/jison

Taking Hive's Hplsql.g4 as an example, let's parse a SQL statement:

```
# antlr4 and grun are the shell aliases from ANTLR's getting-started setup
antlr4 Hplsql.g4
javac Hplsql*.java
```

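Parse a SELECT statement:

```
# grun reads the statement from standard input; end the input with EOF (Ctrl+D on Unix-like systems)
grun Hplsql r -tokens
```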

grun prints the token stream produced by the lexer.
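What -tokens prints is the lexer's output: the input split into typed tokens. Here is a hand-written Java (16+) sketch of that step, for illustration only. The token types (KEYWORD, IDENT, NUMBER, STRING, SYMBOL) and the short keyword list are assumptions of this sketch, not Hplsql.g4's token names; an ANTLR lexer is generated from the grammar rather than written like this.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class SimpleLexer {
    // Token kinds for this sketch only.
    enum Type { KEYWORD, IDENT, NUMBER, STRING, SYMBOL }

    record Token(Type type, String text) {}

    // A tiny, hypothetical keyword set; real SQL grammars define hundreds.
    private static final List<String> KEYWORDS =
            List.of("SELECT", "FROM", "WHERE", "CREATE", "TABLE");

    // One alternative per token kind; the named group tells us which one matched.
    private static final Pattern TOKEN = Pattern.compile(
            "(?<word>[A-Za-z_][A-Za-z0-9_]*)|(?<num>\\d+)|(?<str>'[^']*')|(?<sym>[(),;*=<>.])");

    static List<Token> tokenize(String sql) {
        List<Token> tokens = new ArrayList<>();
        Matcher m = TOKEN.matcher(sql);
        int pos = 0;
        while (true) {
            // Skip whitespace between tokens.
            while (pos < sql.length() && Character.isWhitespace(sql.charAt(pos))) pos++;
            if (pos == sql.length()) break;
            m.region(pos, sql.length());
            if (!m.lookingAt()) {
                throw new IllegalArgumentException("unexpected character '" + sql.charAt(pos) + "' at " + pos);
            }
            if (m.group("word") != null) {
                String word = m.group("word");
                // Words are keywords if they appear (case-insensitively) in KEYWORDS.
                Type type = KEYWORDS.contains(word.toUpperCase(Locale.ROOT)) ? Type.KEYWORD : Type.IDENT;
                tokens.add(new Token(type, word));
            } else if (m.group("num") != null) {
                tokens.add(new Token(Type.NUMBER, m.group("num")));
            } else if (m.group("str") != null) {
                tokens.add(new Token(Type.STRING, m.group("str")));
            } else {
                tokens.add(new Token(Type.SYMBOL, m.group("sym")));
            }
            pos = m.end();
        }
        return tokens;
    }

    public static void main(String[] args) {
        for (Token t : tokenize("SELECT id, name FROM users WHERE id = 1")) {
            System.out.println(t.type() + " '" + t.text() + "'");
        }
    }
}
```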

Parse a CREATE TABLE statement:

```
grun Hplsql r -tokens
```

The steps above show how ANTLR parses Hive statements; Hive itself parses statements with the Java code that ANTLR generates from its grammar.

Next, here is how to parse Hive statements from Java code. First, add the dependency:

```xml
<dependency>
```

The code:

```java
import com.google.common.collect.Lists;
```

A test case that parses a Hive CREATE TABLE statement:

```java
import org.junit.Test;
```

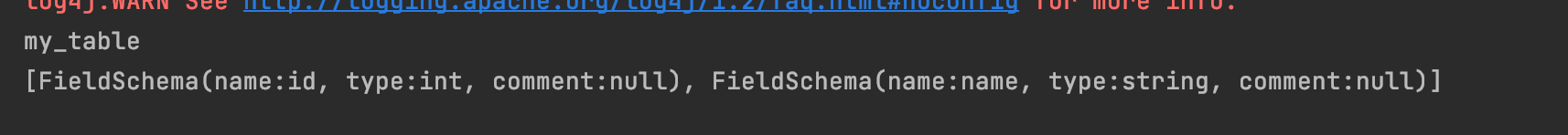

Output:

The example above extracts the Hive table name and column names; other properties can be pulled out of the syntax tree in the same way.
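A minimal sketch of that tree walk, assuming the tree Hive builds for CREATE TABLE db.t (id int, name string) has roughly this shape: a TOK_CREATETABLE root with a TOK_TABNAME child (holding the optional database and the table name) and a TOK_TABCOLLIST child whose TOK_TABCOL entries hold a column name followed by a type node such as TOK_INT. The tree here is built by hand with a hypothetical Node record standing in for Hive's ASTNode (label plus child list), so it runs without the Hive dependency. In real code the tree comes from Hive's parser (for example ParseDriver in hive-exec, whose API differs between Hive versions), and you should confirm the actual shape by printing it, e.g. with toStringTree(). Requires Java 16+ for records.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class CreateTableExtractor {
    // Hypothetical stand-in for Hive's ASTNode: a label plus child nodes.
    record Node(String text, List<Node> children) {
        Node(String text, Node... children) {
            this(text, List.of(children));
        }
    }

    // First direct child with the given label, or null if there is none.
    static Node child(Node node, String label) {
        for (Node c : node.children()) {
            if (c.text().equals(label)) return c;
        }
        return null;
    }

    // Table name: the children of TOK_TABNAME joined with '.', i.e. "db.table" or "table".
    static String tableName(Node createTable) {
        Node tabName = child(createTable, "TOK_TABNAME");
        if (tabName == null) throw new IllegalArgumentException("no TOK_TABNAME child");
        List<String> parts = new ArrayList<>();
        for (Node part : tabName.children()) parts.add(part.text());
        return String.join(".", parts);
    }

    // Columns: each TOK_TABCOL holds the column name, then its type node.
    static Map<String, String> columns(Node createTable) {
        Map<String, String> result = new LinkedHashMap<>(); // keeps declaration order
        Node colList = child(createTable, "TOK_TABCOLLIST");
        if (colList == null) return result; // no explicit column list in this tree
        for (Node col : colList.children()) {
            if (col.text().equals("TOK_TABCOL") && col.children().size() >= 2) {
                result.put(col.children().get(0).text(), col.children().get(1).text());
            }
        }
        return result;
    }

    public static void main(String[] args) {
        // Hand-built tree approximating CREATE TABLE db.t (id int, name string).
        Node tree = new Node("TOK_CREATETABLE",
                new Node("TOK_TABNAME", new Node("db"), new Node("t")),
                new Node("TOK_LIKETABLE"),
                new Node("TOK_TABCOLLIST",
                        new Node("TOK_TABCOL", new Node("id"), new Node("TOK_INT")),
                        new Node("TOK_TABCOL", new Node("name"), new Node("TOK_STRING"))));
        System.out.println(tableName(tree)); // prints db.t
        System.out.println(columns(tree));   // prints {id=TOK_INT, name=TOK_STRING}
    }
}
```

Other properties (partition columns, storage format, table properties) would be read the same way: find the child node with the relevant label and read its children.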