
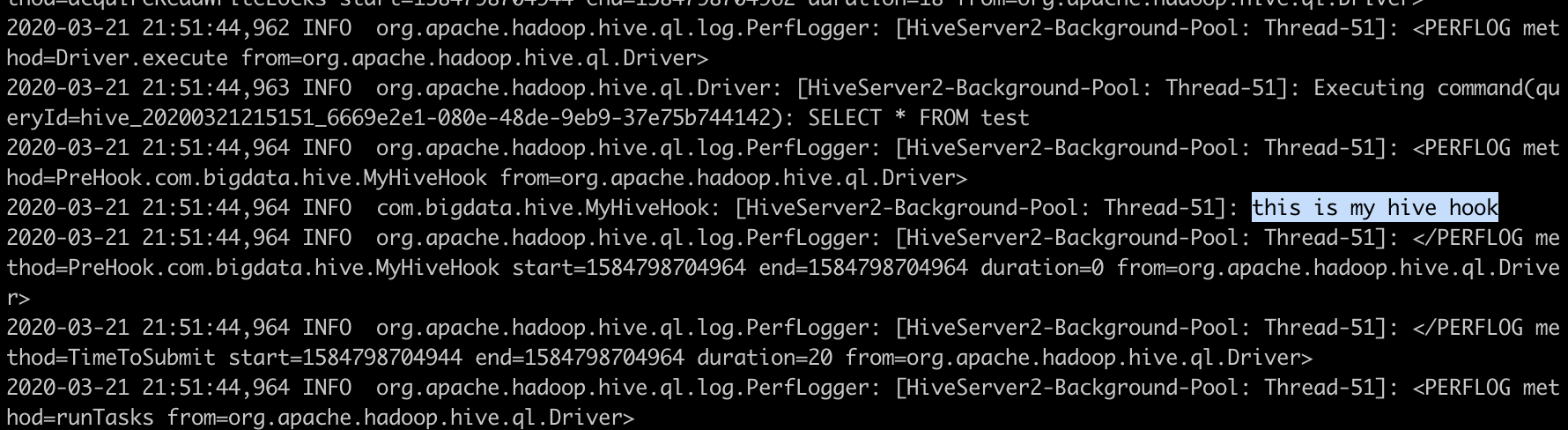

```text
2020-03-28 14:19:12,912 INFO org.apache.hadoop.hive.ql.log.PerfLogger: [HiveServer2-Handler-Pool: Thread-39]: </PERFLOG method=compile start=1585376352892 end=1585376352912 duration=20 from=org.apache.hadoop.hive.ql.Driver>
2020-03-28 14:19:12,912 INFO org.apache.hadoop.hive.ql.Driver: [HiveServer2-Handler-Pool: Thread-39]: Completed compiling command(queryId=hive_20200328141919_d2739f08-478e-4f95-949b-e0bd176e4eab); Time taken: 0.02 seconds
2020-03-28 14:19:12,913 INFO org.apache.hadoop.hive.ql.log.PerfLogger: [HiveServer2-Background-Pool: Thread-79]: <PERFLOG method=Driver.run from=org.apache.hadoop.hive.ql.Driver>
2020-03-28 14:19:12,913 INFO org.apache.hadoop.hive.ql.log.PerfLogger: [HiveServer2-Background-Pool: Thread-79]: <PERFLOG method=TimeToSubmit from=org.apache.hadoop.hive.ql.Driver>
2020-03-28 14:19:12,913 INFO org.apache.hadoop.hive.ql.log.PerfLogger: [HiveServer2-Background-Pool: Thread-79]: <PERFLOG method=acquireReadWriteLocks from=org.apache.hadoop.hive.ql.Driver>
2020-03-28 14:19:12,922 INFO org.apache.hadoop.hive.ql.log.PerfLogger: [HiveServer2-Background-Pool: Thread-79]: </PERFLOG method=acquireReadWriteLocks start=1585376352913 end=1585376352922 duration=9 from=org.apache.hadoop.hive.ql.Driver>
2020-03-28 14:19:12,922 INFO org.apache.hadoop.hive.ql.log.PerfLogger: [HiveServer2-Background-Pool: Thread-79]: <PERFLOG method=Driver.execute from=org.apache.hadoop.hive.ql.Driver>
2020-03-28 14:19:12,922 INFO org.apache.hadoop.hive.ql.Driver: [HiveServer2-Background-Pool: Thread-79]: Executing command(queryId=hive_20200328141919_d2739f08-478e-4f95-949b-e0bd176e4eab): ALTER TABLE test1 CHANGE id id String COMMENT "test"
2020-03-28 14:19:12,923 INFO org.apache.hadoop.hive.ql.log.PerfLogger: [HiveServer2-Background-Pool: Thread-79]: <PERFLOG method=PreHook.com.bigdata.hive.MyHiveHook from=org.apache.hadoop.hive.ql.Driver>
2020-03-28 14:19:12,923 INFO com.bigdata.hive.MyHiveHook: [HiveServer2-Background-Pool: Thread-79]: [CustomHook][Thread: HiveServer2-Background-Pool: Thread-79] | Query executed: ALTER TABLE test1 CHANGE id id String COMMENT "test"
2020-03-28 14:19:12,923 INFO com.bigdata.hive.MyHiveHook: [HiveServer2-Background-Pool: Thread-79]: [CustomHook][Thread: HiveServer2-Background-Pool: Thread-79] | Operation: ALTERTABLE_RENAMECOL
2020-03-28 14:19:12,923 INFO com.bigdata.hive.MyHiveHook: [HiveServer2-Background-Pool: Thread-79]: [CustomHook][Thread: HiveServer2-Background-Pool: Thread-79] | Monitored Operation
2020-03-28 14:19:12,928 INFO com.bigdata.hive.MyHiveHook: [HiveServer2-Background-Pool: Thread-79]: [CustomHook][Thread: HiveServer2-Background-Pool: Thread-79] | Hook metadata input value: {"lastAccessTime":0,"ownerType":"USER","parameters":{"last_modified_time":"1585376257","totalSize":"0","numRows":"-1","rawDataSize":"-1","COLUMN_STATS_ACCURATE":"false","numFiles":"0","transient_lastDdlTime":"1585376257","last_modified_by":"hive"},"owner":"hive","tableName":"test1","dbName":"default","tableType":"MANAGED_TABLE","privileges":null,"sd":{"location":"hdfs://master:8020/user/hive/warehouse/test","parameters":{},"inputFormat":"org.apache.hadoop.mapred.TextInputFormat","outputFormat":"org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat","compressed":false,"numBuckets":-1,"sortCols":[],"cols":[{"comment":"test","name":"id","type":"string","setName":true,"setType":true,"setComment":true},{"comment":null,"name":"name","type":"string","setName":true,"setType":true,"setComment":false}],"colsSize":2,"serdeInfo":{"setSerializationLib":true,"name":null,"parameters":{"serialization.format":"1"},"serializationLib":"org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe","parametersSize":1,"setParameters":true,"setName":false},"skewedInfo":{"skewedColNamesSize":0,"skewedColNamesIterator":[],"setSkewedColNames":true,"skewedColValuesSize":0,"skewedColValuesIterator":[],"setSkewedColValues":true,"skewedColValueLocationMapsSize":0,"setSkewedColValueLocationMaps":true,"skewedColValueLocationMaps":{},"skewedColNames":[],"skewedColValues":[]},"bucketCols":[],"parametersSize":0,"setParameters":true,"colsIterator":[{"comment":"test","name":"id","type":"string","setName":true,"setType":true,"setComment":true},{"comment":null,"name":"name","type":"string","setName":true,"setType":true,"setComment":false}],"setCols":true,"setLocation":true,"setInputFormat":true,"setOutputFormat":true,"setCompressed":true,"setNumBuckets":true,"setSerdeInfo":true,"bucketColsSize":0,"bucketColsIterator":[],"setBucketCols":true,"sortColsSize":0,"sortColsIterator":[],"setSortCols":true,"setSkewedInfo":true,"storedAsSubDirectories":false,"setStoredAsSubDirectories":true},"temporary":false,"partitionKeys":[],"setTableName":true,"setOwner":true,"retention":0,"setRetention":true,"partitionKeysSize":0,"partitionKeysIterator":[],"setPartitionKeys":true,"viewOriginalText":null,"setViewOriginalText":false,"viewExpandedText":null,"setViewExpandedText":false,"setTableType":true,"setPrivileges":false,"setTemporary":false,"setOwnerType":true,"setDbName":true,"createTime":1584893296,"setCreateTime":true,"setLastAccessTime":true,"setSd":true,"parametersSize":8,"setParameters":true}
2020-03-28 14:19:12,935 INFO com.bigdata.hive.MyHiveHook: [HiveServer2-Background-Pool: Thread-79]: [CustomHook][Thread: HiveServer2-Background-Pool: Thread-79] | Hook metadata output value: {"lastAccessTime":0,"ownerType":"USER","parameters":{"last_modified_time":"1585376257","totalSize":"0","numRows":"-1","rawDataSize":"-1","COLUMN_STATS_ACCURATE":"false","numFiles":"0","transient_lastDdlTime":"1585376257","last_modified_by":"hive"},"owner":"hive","tableName":"test1","dbName":"default","tableType":"MANAGED_TABLE","privileges":null,"sd":{"location":"hdfs://master:8020/user/hive/warehouse/test","parameters":{},"inputFormat":"org.apache.hadoop.mapred.TextInputFormat","outputFormat":"org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat","compressed":false,"numBuckets":-1,"sortCols":[],"cols":[{"comment":"test","name":"id","type":"string","setName":true,"setType":true,"setComment":true},{"comment":null,"name":"name","type":"string","setName":true,"setType":true,"setComment":false}],"colsSize":2,"serdeInfo":{"setSerializationLib":true,"name":null,"parameters":{"serialization.format":"1"},"serializationLib":"org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe","parametersSize":1,"setParameters":true,"setName":false},"skewedInfo":{"skewedColNamesSize":0,"skewedColNamesIterator":[],"setSkewedColNames":true,"skewedColValuesSize":0,"skewedColValuesIterator":[],"setSkewedColValues":true,"skewedColValueLocationMapsSize":0,"setSkewedColValueLocationMaps":true,"skewedColValueLocationMaps":{},"skewedColNames":[],"skewedColValues":[]},"bucketCols":[],"parametersSize":0,"setParameters":true,"colsIterator":[{"comment":"test","name":"id","type":"string","setName":true,"setType":true,"setComment":true},{"comment":null,"name":"name","type":"string","setName":true,"setType":true,"setComment":false}],"setCols":true,"setLocation":true,"setInputFormat":true,"setOutputFormat":true,"setCompressed":true,"setNumBuckets":true,"setSerdeInfo":true,"bucketColsSize":0,"bucketColsIterator":[],"setBucketCols":true,"sortColsSize":0,"sortColsIterator":[],"setSortCols":true,"setSkewedInfo":true,"storedAsSubDirectories":false,"setStoredAsSubDirectories":true},"temporary":false,"partitionKeys":[],"setTableName":true,"setOwner":true,"retention":0,"setRetention":true,"partitionKeysSize":0,"partitionKeysIterator":[],"setPartitionKeys":true,"viewOriginalText":null,"setViewOriginalText":false,"viewExpandedText":null,"setViewExpandedText":false,"setTableType":true,"setPrivileges":false,"setTemporary":false,"setOwnerType":true,"setDbName":true,"createTime":1584893296,"setCreateTime":true,"setLastAccessTime":true,"setSd":true,"parametersSize":8,"setParameters":true}
```
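Each closing `</PERFLOG ...>` line in this log records when a Driver phase started and ended as epoch milliseconds, and `duration` equals `end - start` (20 ms for `compile`, 9 ms for `acquireReadWriteLocks`). The following is a minimal sketch for extracting those fields from such lines. The helper names `parse_perflog_end` and `to_utc` and the regular expression are hypothetical, not part of Hive, and the pattern assumes the exact `method`/`start`/`end`/`duration` field order shown here.

```python
import re
from datetime import datetime, timedelta, timezone

# Hypothetical pattern for the closing PerfLogger tag, assuming the field order seen in this log:
# </PERFLOG method=compile start=1585376352892 end=1585376352912 duration=20 from=...>
PERFLOG_END = re.compile(
    r"</PERFLOG method=(?P<method>\S+) start=(?P<start>\d+) "
    r"end=(?P<end>\d+) duration=(?P<duration>\d+)"
)

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def parse_perflog_end(line):
    """Return (method, start_ms, end_ms, duration_ms) for a </PERFLOG ...> line, else None.

    Opening <PERFLOG method=...> lines carry no timings and therefore return None.
    """
    m = PERFLOG_END.search(line)
    if m is None:
        return None
    return m["method"], int(m["start"]), int(m["end"]), int(m["duration"])


def to_utc(epoch_ms):
    """Convert epoch milliseconds to an aware UTC datetime using exact integer arithmetic."""
    return EPOCH + timedelta(milliseconds=epoch_ms)


if __name__ == "__main__":
    line = ("2020-03-28 14:19:12,922 INFO org.apache.hadoop.hive.ql.log.PerfLogger: "
            "[HiveServer2-Background-Pool: Thread-79]: </PERFLOG method=acquireReadWriteLocks "
            "start=1585376352913 end=1585376352922 duration=9 from=org.apache.hadoop.hive.ql.Driver>")
    method, start, end, duration = parse_perflog_end(line)
    print(method, duration, end - start)  # acquireReadWriteLocks 9 9
    print(to_utc(end))                    # 2020-03-28 06:19:12.922000+00:00
```

Converting `end=1585376352922` gives 06:19:12.922 UTC, while the same log line is stamped 14:19:12,922. This suggests that the HiveServer2 host writes its log timestamps in UTC+8.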