Prerequisite: the IK analyzer plugin is already installed; for installation, please refer to

1. Add a dictionary file under the IK analyzer's config directory

~/software/apache/elasticsearch-6.2.4/config/analysis-ik$ ls | grep mydic.dic

The file contains:

我给祖国献石油

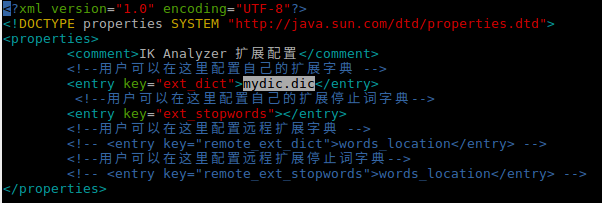
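A minimal sketch of creating such a dictionary file from the shell. It assumes that IK dictionaries are plain UTF-8 text with one entry per line; the `DIC_FILE` variable is a hypothetical name introduced only for this example, and the file is written to the current directory so that nothing in a live install is touched.

```shell
# Hypothetical variable: where to write the dictionary file (defaults to ./mydic.dic).
DIC_FILE="${DIC_FILE:-mydic.dic}"

# Write the entry as UTF-8 text, one entry per line.
printf '%s\n' '我给祖国献石油' > "$DIC_FILE"

# Show what was written before copying it into the IK config directory, e.g.:
#   cp mydic.dic ~/software/apache/elasticsearch-6.2.4/config/analysis-ik/
cat "$DIC_FILE"
```

Writing to a scratch location first makes it easy to check the file before it is placed next to the plugin's configuration.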

2. Configure the dictionary path: edit the IKAnalyzer.cfg.xml configuration file and register the new dictionary
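As a rough sketch of what the edited configuration might look like, the snippet below writes a sample file to the current directory for comparison with the real one. It assumes the stock IK layout, in which the `ext_dict` entry holds the custom dictionary path relative to the directory containing IKAnalyzer.cfg.xml; the output name `IKAnalyzer.cfg.xml.sample` is hypothetical and chosen so the real file is not overwritten.

```shell
# Write a sample config next to the working directory (not into the live install).
# Assumption: ext_dict is resolved relative to the directory holding IKAnalyzer.cfg.xml.
cat > IKAnalyzer.cfg.xml.sample <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE properties SYSTEM "http://java.sun.com/dtd/properties.dtd">
<properties>
    <comment>IK Analyzer extension configuration</comment>
    <!-- custom extension dictionary added in step 1 -->
    <entry key="ext_dict">mydic.dic</entry>
    <!-- custom stopword dictionary (left empty in this sketch) -->
    <entry key="ext_stopwords"></entry>
</properties>
EOF

# Print the line that registers the custom dictionary.
grep 'ext_dict' IKAnalyzer.cfg.xml.sample
```

Comparing the sample against the real file (for example with `diff`) before editing helps avoid dropping entries that the installed version already contains.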

3. Restart Elasticsearch

4. Test

data.json

{
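A request body for the `_analyze` API generally names an analyzer and the text to tokenize. The sketch below writes one such body; the choice of the `ik_max_word` analyzer, the input text, and the file name `data.sample.json` are all assumptions made for illustration, not the contents of the original data.json.

```shell
# Hypothetical request body for the _analyze API.
# Assumptions: the ik_max_word analyzer and the dictionary phrase as input text.
cat > data.sample.json <<'EOF'
{
  "analyzer": "ik_max_word",
  "text": "我给祖国献石油"
}
EOF

# Show the body that would be sent with curl's -d@data.sample.json option.
cat data.sample.json
```

Using the exact dictionary phrase as the input text makes the effect of the new entry easy to spot in the returned tokens.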

IK tokenization result before adding the dictionary

curl -H 'Content-Type: application/json' 'http://localhost:9200/_analyze?pretty=true' -d@data.json

IK tokenization result after adding the dictionary; the tokens in the result now include "我给祖国献石油"

curl -H 'Content-Type: application/json' 'http://localhost:9200/_analyze?pretty=true' -d@data.json
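To check the result without reading the JSON by eye, a small helper can look for the new entry among the returned tokens. This is only a sketch: `has_token` is a hypothetical function name, and it assumes the response carries each token in a `"token"` field and that the searched text contains no regular-expression metacharacters.

```shell
# has_token TOKEN: read an _analyze JSON response on stdin and succeed
# if some "token" field equals TOKEN exactly. Whitespace around the colon
# is tolerated, so both compact and pretty-printed responses match.
has_token() {
  grep -Eq "\"token\"[[:space:]]*:[[:space:]]*\"$1\""
}

# Usage against a running node (requires Elasticsearch on localhost:9200):
#   curl -s -H 'Content-Type: application/json' \
#     'http://localhost:9200/_analyze?pretty=true' -d@data.json \
#     | has_token '我给祖国献石油' && echo "custom entry is active"
```

A plain `grep` keeps the check dependency-free; a JSON-aware tool would be more robust if token text may contain quotes or other special characters.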